“X explains Z% of the variance in Y” by Leon Lang


Content provided by LessWrong. All podcast content, including episodes, graphics, and podcast descriptions, is uploaded and provided directly by LessWrong or their podcast platform partner. If you believe someone is using your copyrighted work without your permission, you can follow the process outlined here https://he.player.fm/legal.

Audio note: this article contains 218 uses of LaTeX notation, so the narration may be difficult to follow. There's a link to the original text in the episode description.

Recently, in a group chat with friends, someone posted this LessWrong post and quoted:

The group consensus on somebody's attractiveness accounted for roughly 60% of the variance in people's perceptions of the person's relative attractiveness.

I answered that, embarrassingly, even after reading Spencer Greenberg's tweets for years, I don't actually know what it means when one says:

_X_ explains _p_ of the variance in _Y_.[1]

What followed was a vigorous discussion about the correct definition, and several links to external sources like Wikipedia. Sadly, all online explanations (e.g. on Wikipedia here and here), while precise, seem to me philosophically wrong, since they confuse the platonic concept of explained variance with the variance explained by [...]
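For reference, one standard way to make the phrase precise (an assumption here, since the narration cuts off before the post's own definitions) starts from the law of total variance. It splits the variance of _Y_ into the part carried by the conditional mean E[_Y_ | _X_] and the part that remains once _X_ is known, and takes _p_ to be the first part's share:

$$
\operatorname{Var}(Y) = \operatorname{Var}\big(\mathbb{E}[Y \mid X]\big) + \mathbb{E}\big[\operatorname{Var}(Y \mid X)\big],
\qquad
p = \frac{\operatorname{Var}\big(\mathbb{E}[Y \mid X]\big)}{\operatorname{Var}(Y)} = 1 - \frac{\mathbb{E}\big[\operatorname{Var}(Y \mid X)\big]}{\operatorname{Var}(Y)}.
$$

On this reading, _1 - p_ is the expected fraction of the variance left over after conditioning on _X_. Because it uses the true conditional mean rather than a fitted model, it is a property of the joint distribution of _X_ and _Y_, not of any particular regression.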

---

Outline:

(02:38) Definitions

(02:41) The verbal definition

(05:51) The mathematical definition

(09:29) How to approximate _1 - p_

(09:41) When you have lots of data

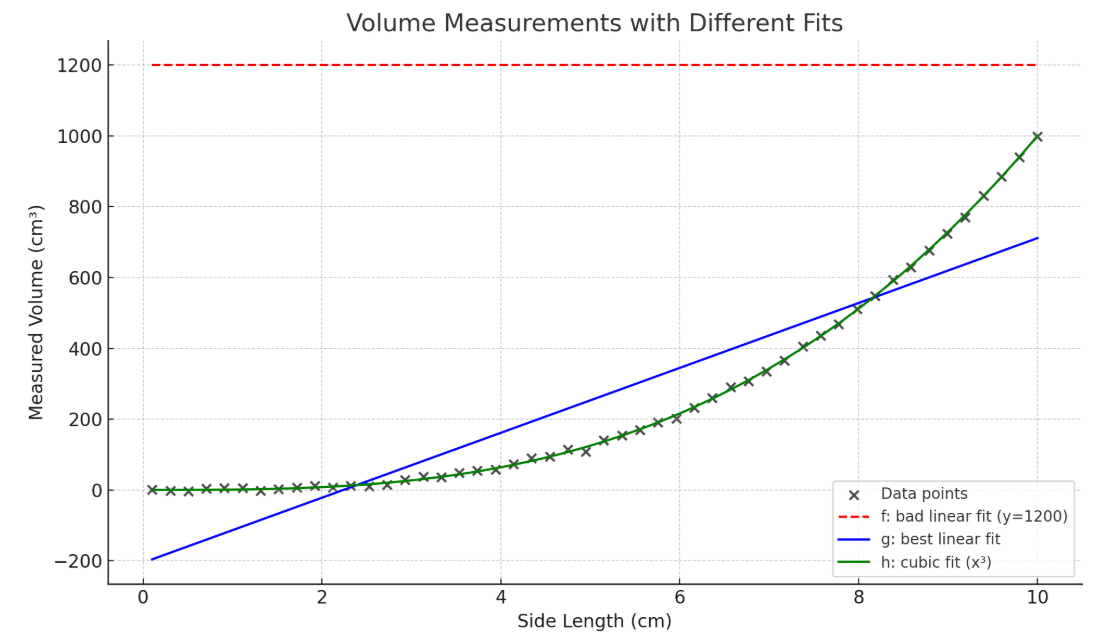

(10:45) When you have less data: Regression

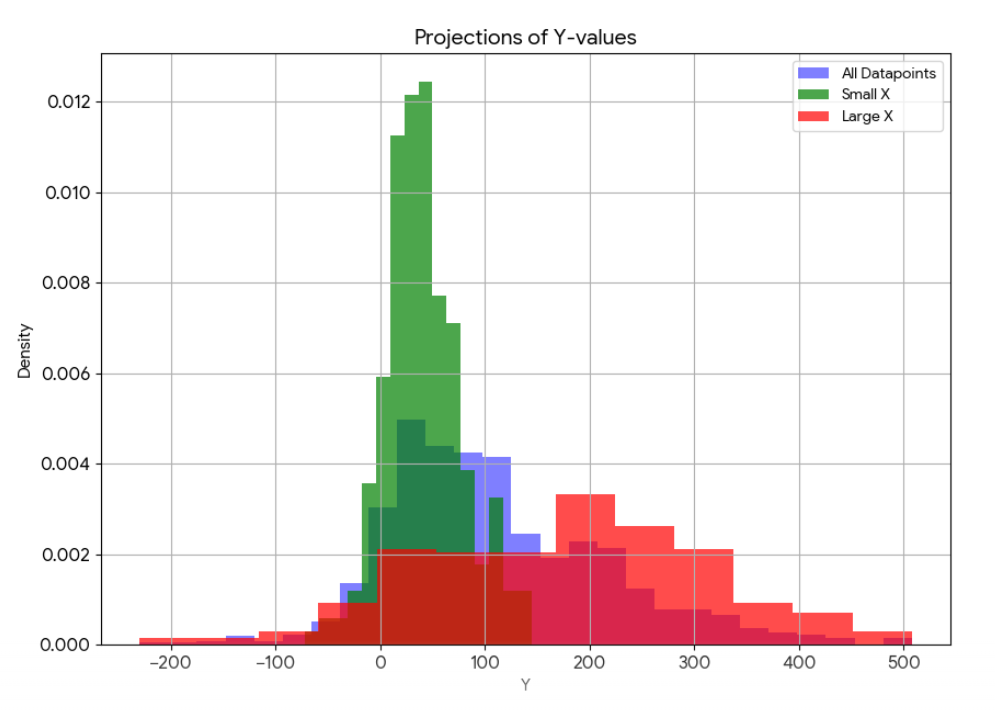

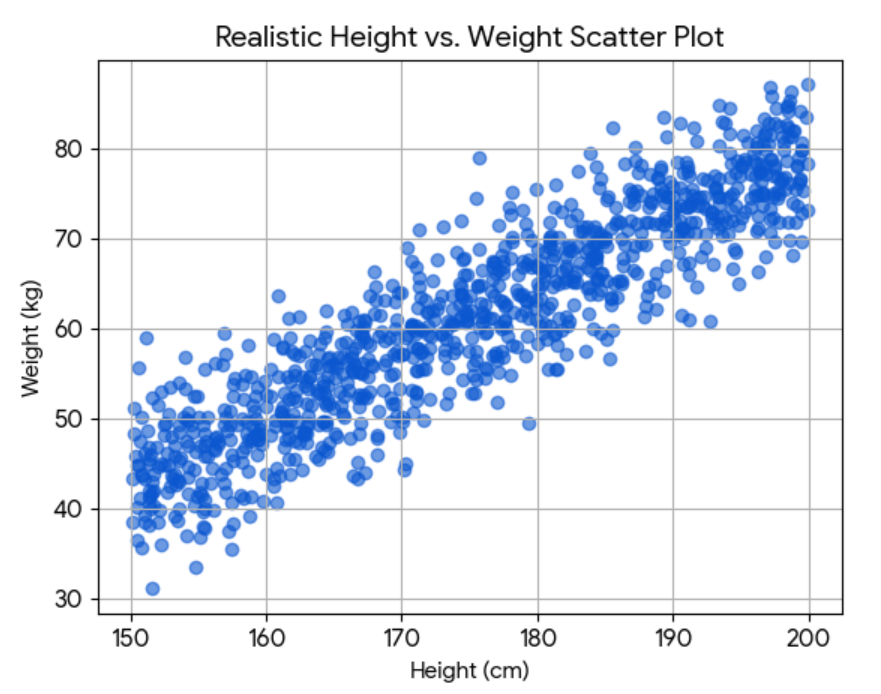

(12:59) Examples

(13:23) Dependence on the regression model

(14:59) When you have incomplete data: Twin studies

(17:11) Conclusion
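The outline's approximation sections can be illustrated with a minimal Python sketch. It assumes the law-of-total-variance reading p = 1 - E[Var(Y | X)] / Var(Y) and uses NumPy; the function names and the toy data (a U-shaped relationship plus unit-variance noise) are hypothetical and not taken from the post. With many observations per value of _X_, each conditional variance can be estimated within its group; a regression instead replaces E[Y | X] with a fitted model, so its answer depends on the model chosen.

```python
import numpy as np


def explained_fraction_by_grouping(x, y):
    """Estimate p = 1 - E[Var(Y | X)] / Var(Y) directly from data.

    Assumes X takes only a few distinct values, each observed many times,
    so Var(Y | X = value) can be estimated within each group.
    """
    x = np.asarray(x)
    y = np.asarray(y, dtype=float)
    expected_cond_var = 0.0
    for value in np.unique(x):
        group = y[x == value]
        # Weight each group's variance by how often that value of X occurs.
        expected_cond_var += (group.size / y.size) * group.var()
    return 1.0 - expected_cond_var / y.var()


def explained_fraction_by_linear_fit(x, y):
    """Approximate E[Y | X] with a least-squares line and return its R^2.

    This is the variance explained by this particular model; if the true
    E[Y | X] is not linear, it can fall far below the grouping estimate.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    slope, intercept = np.polyfit(x, y, deg=1)
    residuals = y - (slope * x + intercept)
    return 1.0 - residuals.var() / y.var()


# Hypothetical toy data: E[Y | X] = (X - 2)^2 with X uniform on {0, ..., 4},
# plus independent Gaussian noise with variance 1.
rng = np.random.default_rng(0)
n = 100_000
x = rng.integers(0, 5, size=n)
y = (x - 2) ** 2 + rng.normal(0.0, 1.0, size=n)

# Population value: Var(E[Y | X]) = 2.8 and Var(Y) = 2.8 + 1, so p = 2.8 / 3.8.
true_p = 2.8 / 3.8
p_grouping = explained_fraction_by_grouping(x, y)
p_linear = explained_fraction_by_linear_fit(x, y)
print(f"true p = {true_p:.3f}, grouping = {p_grouping:.3f}, linear fit = {p_linear:.3f}")
```

Here the grouping estimate should land near 2.8 / 3.8 ≈ 0.74, while the straight-line fit explains almost nothing, because the best linear trend through a symmetric U shape is flat. In-sample, the linear R^2 can never exceed the grouping estimate, since the group means are the best-fitting function of a discrete _X_.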

The original text contained six footnotes, which were omitted from this narration.

---

First published:

June 20th, 2025

Source:

https://www.lesswrong.com/posts/E3nsbq2tiBv6GLqjB/x-explains-z-of-the-variance-in-y

---

Narrated by TYPE III AUDIO.
